Artificial intelligence (AI) is an unavoidable concept in the digital age, if not because of its pervasive application, then because it offers a technological answer to information overload and the quest for context. Kaleido Insights defines AI as an umbrella term for the variety of tools and methods used to mimic cognitive functions across three areas: perception/vision, speech/language, and learning/analysis.

While full-scale enterprise AI adoption remains more aspirational than actual, a 2021 PwC survey found that 54% of business executives believe AI will play a significant role in improving their businesses’ decision-making processes.

Business interest in AI has been compounded by its promise of efficiency in the form of speed, accuracy, agility, and access to insights embedded in “dark data,” a reference to the estimated 80% of enterprise data that goes unused. Interest (and critique) also spans disciplines, from ethics to education, technical to design, policy to biology. Yet there is a critical link missing from AI discourse: the link between AI’s code-based statistical composition and how we humans make decisions.

When inferences become our references

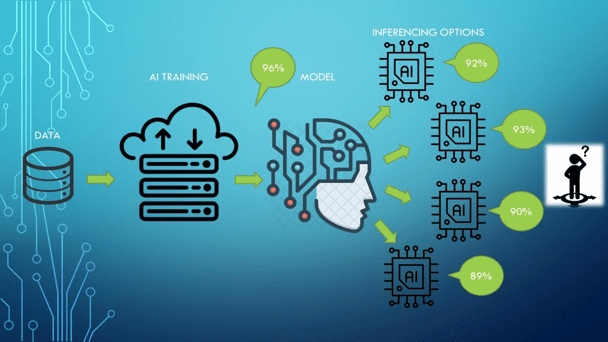

Current machine learning is only as “intelligent” as the data we feed it. It produces an output based on the data we expose it to and the parameters we define. But just because the machine says X is 90% likely does not mean it is so. The machine infers statistical likelihood from data, which itself is typically limited to explicit (codifiable) knowledge.
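To make the point concrete, here is a minimal sketch in Python of how a “90% likely” output can arise. It is a toy frequency estimate, not how any particular AI product works, and the data, labels, and the `infer_likelihood` function are all hypothetical names invented for illustration.

```python
from collections import Counter

# Hypothetical records the "model" was exposed to: (feature, outcome) pairs.
# Nine of the ten past cases with this feature ended in the same outcome.
observations = [("late_payment", "default")] * 9 + [("late_payment", "repaid")]


def infer_likelihood(feature, outcome, data):
    """Share of past records with `feature` that ended in `outcome`."""
    matching = [o for f, o in data if f == feature]
    if not matching:
        return None  # nothing was observed, so there is nothing to infer from
    return Counter(matching)[outcome] / len(matching)


score = infer_likelihood("late_payment", "default", observations)
print(score)  # 0.9
```

The 0.9 describes the ten records the machine happened to see. It says nothing certain about the next individual, and anything that was never codified into the data cannot influence the score at all.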

Yet when humans receive that machine-generated output (be it a recommendation, diagnostic, worthiness score, or otherwise) from an AI-powered application, little regard is given to this grey area. By grey area, we mean that an AI output is an inference, but the use of that output becomes a reference.

The computer told me to!

Inferences are often mentioned in discussions of AI Explainability, or the capacity for AI itself to articulate explanations substantiating why it produced a specific output, but the implications of AI inferences go far beyond AI Explainability. As we offload decision-making to machines, how will we (consumers, citizens, patients, workers, executives, doctors, politicians, regulators, etc.) become indebted to systems?

An eye-opening visualization of systemic impacts of AI inferencing

To clarify, consider this summary of the inferences made possible by eye-tracking data. When inferences become references, we assume the individual’s personality traits, mental health, and other attributes (the pink labels) are what the algorithm tells us they are.

“We don’t see things as they are, we see them as we are.” –Anais Nin

It is also important to see AI inferencing in the context of the AI market, particularly AI marketing. Consumers, businesses, and governments alike are told AI has predictive value and can identify issues before, better, or faster than humans, including issues to which humans are otherwise blind. Compounding this allure is the implicit promise of knowledge, as AI vendors often sell AI as a flashlight: to find new customers; to gain insights into hidden patterns; to identify criminals… Indeed, our biases inform AI biases, as much in the implementation of AI as in its inferences and how they are applied.

Questioning AI-generated inferences is not just about the reliability of recommendations. As the distinction blurs between what we reference as fact and what the machine infers from data, countless impacts on humans, businesses, and society emerge.

- Transparency & Explainability: our ability to understand why AI generates the outputs that it does; or why certain data can corrupt AI models

- Erroneousness: consequences of wrong, bad, or incomplete AI inferences

- Compliance & Auditability: legal implications of AI inferences used for decision-making in regulated areas

- Governance & Accountability: who or what oversees AI and data sets; who is responsible for maintenance, impacts, and measuring its effects

- Discrimination & Disenfranchisement: exclusionary impacts of AI inferences, whether inadvertent or erroneous

- Safety, Health, Access: dangers, costs, and liabilities when AI inferences introduce unexpected risks

- Human Vulnerabilities: when AI’s “predictive” capabilities are used to manipulate human weaknesses, biases, and other vulnerabilities

- Triangulation & Missing Data: when inferences are used to fill in missing data without transparency or verification methods

- Digital Identities & Digital Frankensteins: impacts of incomplete or incorrect labels about individuals, as part of digital profiles based on inferencing

- Information Asymmetry: when inferencing is used to further big data assets and profiling to exert power over competitors or individuals

- Business Reputation: how all of the above areas can impact businesses’ reputations, customer loyalty, partner networks, etc.

- Emergence of New Data Sets: implications of emergent data sets (e.g. biometrics, genomics, etc.) on AI inferences used for decision-making

- Next Generation Workforce: when workers are increasingly removed from decision-making, whether through displacement via automation or lack of AI transparency

- Taxation on Knowledge: longer-term impacts of intellectual debt and the growth of theory-less knowledge

Distinguishing between what machines infer and what we humans reference is a critical tension for our time; the more we rely on big data, the more we rely on AI to reduce its dimensionality and make sense of it.

To learn about this topic, impact areas, and how to apply this thinking to your AI initiatives, join us for a discussion with Sensemakers on June 16, 2021. Attend online at no cost by registering here.
